
Last Sunday morning, a US man posted a video of himself on Facebook announcing his intent to commit murder. Two minutes later he posted another video showing him shooting and killing an elderly man.

This isn’t the first time someone has used Facebook to publicise extreme acts or post hateful content. Justin Osofsky, a vice president of Facebook, said in a public post that the company knew it had to “do better” to stop content like this from being seen and shared.

Facebook is one of several social media giants grappling with its role in policing content. There is rising pressure for these platforms to act against extreme content. But the limits of today’s technology, the global nature of the internet and the ethics of free speech have led to (very) mixed results.

Facebook, Twitter and Google executives were grilled by the UK’s Home Affairs select committee last month over their failure to effectively monitor online abuse and hate crime, despite the billions their companies earn. The executives were told their companies had “a terrible reputation” when it came to dealing swiftly with content and were committing “commercial prostitution”.

This comes after more than 250 major brands reportedly pulled advertising from YouTube, owned by Google, after revelations that their ads were being placed next to extremist or hateful content. Writing to Google, MPs said the company was “profiting from hatred”.

Germany has recently cracked down on hate speech, threatening social media giants with fines of up to €50 million if they fail to promptly remove offensive posts. Chancellor Angela Merkel’s cabinet believes companies such as Facebook and Twitter are not doing enough to remove content that breaks German law. Despite having some of the strongest hate speech laws in the world, Germany has seen online hate become a mounting problem, coinciding with a recent rise in the number of asylum-seekers.

The nature of modern online advertising means companies often don’t know where their ads are running.

Following the mounting pressure, Google announced a series of measures to tackle the issue, including giving brands more control over where their ads appear and better oversight of those ads. But Google UK managing director Ronan Harris said in a statement that the sheer volume of advertising meant Google didn’t “always get it right”.

“In a very small percentage of cases, ads appear against content that violates our policies,” he said. YouTube alone gets 400 hours of video uploaded to the platform every minute and it’s a challenge to keep certain content away from advertisements.

Checking every piece of content would be “humanly impossible”, admits Tim Jordan, professor of Digital Cultures at the University of Sussex. “But they’ve put huge resources in robot cars and glasses that have invaded everyone’s privacy – are they putting the appropriate kinds of resource in this kind of system?”

Users can and do flag inappropriate material for the platforms to take down, but the process is inefficient and too slow. “Tech companies are just not quick enough to take [hate content] down, it has taken them weeks sometimes to react,” says David Stupples of City University.

The slow response is especially dangerous because social media posts or videos often reach their widest audience within a few hours.

But according to the tech giants, machines that can match humans at distinguishing a truly offensive post from one written as sarcasm or criticism do not yet exist. Machines find it easier to identify images, which is why child pornography is easier to pick out than extremist content, which has to be interpreted.

Mark Zuckerberg, founder of Facebook, said his company was working on artificial intelligence “to tell the difference between news stories about terrorism and actual terrorist propaganda” so that it could take action. However, he added that this was “technically difficult, as it requires building AI that can read and understand news”.

A spokesperson for the Community Security Trust, which advises the Jewish community on security and anti-Semitism, said that social media companies have improved at removing inappropriate content and banning users who engage in hate speech, but that there were no clear guidelines.

“Rates of removal are inconsistent – what might be removed one day due to violating the social media platform’s community guidelines, may not be removed the next day,” he said.

Removing hateful content on Twitter is especially difficult as there is no system to prevent a person creating multiple accounts. Trolls and users whose accounts are blocked because of excessive abuse can immediately create a new account to continue spewing hate. Sometimes banned users move to other, lesser-known social media networks.

Another challenge for tech giants is the evolving nature of hate sites and the fact that they often appear benign at the start. David Stupples, professor of networked electronic and radio systems at City University, explains that many sites start out publishing material that is merely biased and that, by the time extreme or hateful content is posted and seen, the damage is already done.

“It’s difficult to find an evolving site but once it has passed the red line, we should be able to get those sites quickly,” he said. For Stupples, the real challenge is the grey zone where “you know you’re approaching the red line but it’s still ethical”, and he believes there is no clear definition of when content should be policed.

Fiyaz Mughal, founder of Tell MAMA, an organisation that records anti-Muslim attacks, believes the platforms should be policed more closely.

“When social media [platforms] repeatedly fail to act because there is no economic pressure on them, the government has to intervene,” he says. Mughal explained that of the threatening material Tell MAMA flagged on Twitter, only about 10% was taken down.

Both Tell MAMA and the CST have worked with the police to track the evolving language of hate speech, including the code words used to disguise insults against certain minority groups. The CST spokesperson says that many of the code words are terms “employed by the Alt-Right, which have been co-opted by anti-Semites on social media to refer to Jews.”

Others, however, worry that new laws policing the internet would limit freedom of speech. “It does go into areas of censorship,” says Jordan. “People are concerned about trolling and hate speech, but there have been up sides… The internet provides means by which people can speak in ways they hadn’t been able to in other contexts.”

Allowing users to flag content raises difficulties for moderators policing the online platforms. Last year, Facebook moderators took down a Pulitzer Prize-winning photograph from the Vietnam War depicting a naked girl running from a napalm strike. After criticism, Facebook reposted the photo.

Another complication has been the global nature of the platforms where hate and abuse are spewed. In the US, legislation prevents platform owners from being held legally responsible for content unless they are asked to remove something by law.

The legislation in the UK on malicious communications was last amended in 2003 and Mughal says the existing laws are not equipped to handle the changed online landscape the UK faces today. He believes that, for things to improve, tech giants must work more closely with the UK government.

“There is a fundamental failure in the business model, there was no thinking of safety and security – essentially a couple of young dudes in San Francisco created a platform and sent it out to the world,” he says.

The Home Office has tried several times to get tech companies to agree to work more closely with law enforcement. But companies like Google and Facebook, which have roots in the US and consider themselves accountable to a vast community of users, are not as concerned as UK-based companies about their reputation among British MPs.

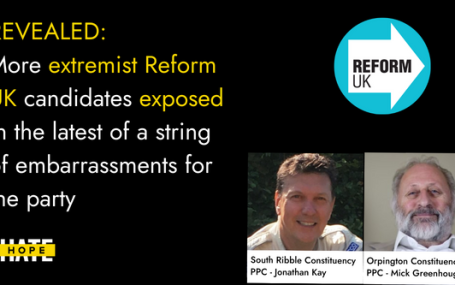

HOPE not hate reveals two more extremist candidates from Reform UK, in the latest of a string of embarrassments for the party UPDATE: Just hours…