
This report is the first step in a new research project by HOPE not hate that aims to develop our understanding of how far-right Telegram chats could help drive radicalisation and the spread of far-right narratives.

Telegram has been connected to several cases of planned violence and terrorism convictions in the UK. Recently Ashley Podsiad-Sharp, the leader of White Stag Athletics Club and an associate of the nazi terror group National Action, was sentenced to eight years in prison for disseminating terrorist material on Telegram. The judge who sentenced Podsiad-Sharp said he had used a Telegram group as “camouflage” to recruit “ignorant and disillusioned men” and incite them to violence. Earlier this year, Luca Benincasa was convicted for his involvement in the Feuerkrieg Division (FKD), a proscribed group. Benincasa’s activism took place almost solely on Telegram.

Cases like these have put a spotlight on Telegram as an important space for far-right radicalisation. This report develops a method to identify individuals who are key to spreading hateful ideology in far-right Telegram chats. We use a combination of deep learning and analysis of user activity to assess the potential for interactions between users to spread far-right narratives.

Our metric takes into account whether a user has expressed any of 13 different far-right topics, whether they have interacted with content that expresses these topics, and whether they go on to express those topics after interacting with that content. The method enables us to surface users who are disproportionately likely to influence other users to take up far-right ideas.
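To make the three ingredients concrete, here is a minimal Python sketch of one way such an uptake count could work. It is an illustration under stated assumptions, not the report's actual metric: the `Message` structure, the use of replies as the only form of interaction, and the rule that an uptake is credited to the first author who exposed a user to a topic are all hypothetical choices made for the example. Topic labels are assumed to come from a separate classifier.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Message:
    """Hypothetical message record; field names are assumptions for this sketch."""
    msg_id: int
    user: str
    topics: frozenset               # topic labels from a classifier (assumed given)
    reply_to: Optional[int] = None  # id of the message this one interacts with


def uptake_counts(messages):
    """Count, per author, how often a user who interacted with the author's
    message later expresses one of its topics for the first time.

    `messages` is assumed to be in chronological order.
    """
    by_id = {m.msg_id: m for m in messages}
    expressed = defaultdict(set)  # user -> topics the user has expressed so far
    exposed = defaultdict(dict)   # user -> {topic: author who first exposed them}
    uptake = defaultdict(int)     # author -> number of credited uptakes

    for m in messages:
        # Credit an uptake when a user first expresses a topic they were exposed to.
        for topic in m.topics:
            if topic not in expressed[m.user] and topic in exposed[m.user]:
                uptake[exposed[m.user][topic]] += 1
        expressed[m.user] |= m.topics

        # Record exposure: replying to someone else's message counts as interaction.
        parent = by_id.get(m.reply_to)
        if parent is not None and parent.user != m.user:
            for topic in parent.topics:
                if topic not in expressed[m.user]:
                    exposed[m.user].setdefault(topic, parent.user)

    return dict(uptake)
```

In this sketch only a later message counts as uptake, so a reply that already contains the topic is not credited. A real metric would also need to normalise for how many messages each user sends.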

We analyse over 3 million Telegram messages from 12 public far-right chats and identify two types of impactful users, which we call influencers and reinforcers. Our scoring system orders users on a sliding scale from most to least effective, and we draw both groups from roughly the top 1% of users.
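As a rough illustration of cutting a ranked list at the top 1%, the following Python sketch sorts users by score and keeps the highest-scoring share. The function name `top_share`, the dictionary input and the rounding rule are assumptions for the example; the report does not specify how its cut-off is computed.

```python
import math


def top_share(scores, share=0.01):
    """Return the users in the top `share` of `scores`, highest score first.

    `scores` maps a user id to a numeric score (hypothetical input format).
    At least one user is returned whenever `scores` is non-empty.
    """
    k = max(1, math.ceil(len(scores) * share))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]
```

With 150 scored users and the default share, this keeps the two highest-scoring users, because the cut-off is rounded up.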

Our metric shows that influencers have an unusually high probability of posting messages that lead to the uptake of new far-right ideas among other users. Other users engage with their messages at a much higher rate than with those of the average user. Influencers tend to incorporate more narratives into their messages, tying multiple forms of hate into single frames. We argue that this is an effective way to entrench and expand someone’s far-right views.

The impact of reinforcers, on the other hand, comes primarily from the tenacity of their engagement. They tend to send a disproportionately large number of messages containing simpler narratives, and the number of messages, combined with their stature in the community, also leads to meaningful engagement with their content. Their messages contain fewer topics, so they tend not to spread new narratives to other users but to repeat narratives already held by many.

This method has direct practical implications. By identifying the most effective spreaders of hate, it can enable monitoring organisations to counter radicalisation more effectively and platforms like Telegram to intervene. It also helps build knowledge of how influential users communicate with others. This report applies the approach to Telegram, but it can generalise to other platforms as well.

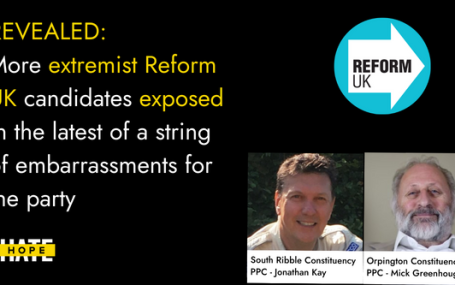
