Last month, a killer filmed himself gunning down a 74-year-old man in the US, while in Thailand a man broadcast himself killing his baby daughter and then committing suicide – the videos remained available on Facebook Live for hours afterwards.

Now the increasing number of suicide, rape and murder clips, as well as posts inciting violence or spewing hate, has put pressure on social media giants to act.

Mark Zuckerberg, Facebook CEO, announced on Wednesday that 3,000 moderators would be hired over the year to review inappropriate content alongside the 4,500 moderators already employed.

“These reviewers will also help us get better at removing things we don’t allow on Facebook like hate speech and child exploitation,” he said.

This comes after the UK Home Affairs Committee called for Facebook and other social media giants to pay for investigations into crimes over their networks, as they had failed to remove illegal content when asked to do so. These included “dangerous terrorist recruitment material, promotion of sexual abuse of children and incitement to racial hatred”.

“The biggest companies have been repeatedly urged by Governments, police forces, community leaders and the public, to clean up their act, and to respond quickly and proactively to identify and remove illegal content. They have repeatedly failed to do so,” the Committee said.

Fiyaz Mughal, founder of Tell MAMA, an organisation that records anti-Muslim attacks, has struggled to get flagged hateful content off social media platforms. He told HOPE not hate that Facebook “is one of the best out of all of them, they have really tried to engage.”

The social media giant has said it receives millions of complaints objecting to content every week from its nearly two billion active users. When material on the platform is flagged, moderators need to quickly determine if it violates the law or the company’s conditions. Their slow response speed has been criticised in the past.

David Stupples, Professor of Networked Electronic and Radio Systems at City University, says companies' reaction times after content is reported need to improve. “My criticism is that they don’t react fast enough but my sympathy is that they can’t monitor everything.”

The Community Security Trust (CST), which advises the Jewish community on security and anti-Semitism, has welcomed Facebook’s decision to hire more staff to monitor violent and illegal posts.

Zuckerberg has also promised to make it simpler to report content to the company and easier for reviewers to contact law enforcement if someone needs help.

Twitter and Google are not hiring additional moderators, but did announce they are taking measures to combat online hate and violent content more effectively. During an online hate summit last month, Nick Pickles, Twitter’s head of policy for the UK, discussed banning users and using time-outs as a method of deterrence for those posting abuse that didn’t reach criminal levels.

However, other companies on the internet have hampered efforts to tackle online hate. Cloudflare, an internet company based in the US, provides services to neo-Nazi sites like The Daily Stormer, according to ProPublica.

The Daily Stormer is one of many racist websites that use Cloudflare to speed up content delivery and defend against attacks. The Daily Stormer regularly calls women whores and blacks inferior. Cloudflare has stated in the past that it will not censor any website, no matter how offensive or hateful the content. But it also turns over to hate sites the personal information of people who criticise their content.

Matthew Prince, Cloudflare’s CEO, wrote in 2013 that removing users posting hate wouldn’t remove the content from the internet; it would only slow its performance and make it more vulnerable to attack.

“We do not believe that ‘investigating’ the speech that flows through our network is appropriate. In fact, we think doing so would be creepy… There is no imminent danger it creates and no provider has an affirmative obligation to monitor and make determinations about the theoretically harmful nature of speech a site may contain,” he wrote.

But ProPublica has revealed that this policy has led to campaigns of harassment against critics. “I wasn’t aware that my information would be sent on. I suppose I, naively, had an expectation of privacy,” Jennifer Dalton told ProPublica, after she had complained that The Daily Stormer was asking its readers to harass Twitter users following the election.

The debate over hateful content and free speech existed long before the internet came into being – and is not likely to end soon. The UK Government has been trying to increase the online accountability of internet companies for some time.

Facebook’s hiring of moderators, which will shorten its response time to violent content, shows its willingness to act. But getting other internet companies to remove unequivocally dangerous or inappropriate content remains a challenge for the UK, as their global nature will only complicate the already controversial task.

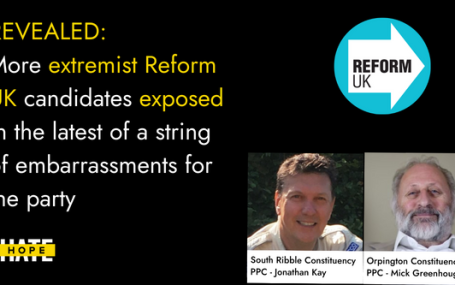

HOPE not hate reveals two more extremist candidates from Reform UK, in the latest of a string of embarrassments for the party UPDATE: Just hours…