
Ever since major social media platforms became ubiquitous in modern society, debates about their obligation to remove hate speech and hateful individuals have raged.

When discussing deplatforming the far right, there are essentially two questions to address. The first is more philosophical, regarding whether we ethically should engage in it. This can lead us to consider its effect on radicalisation and violence, as well as broader issues such as its impact on free speech and the health of public discourse. The second question is more concrete, and asks whether deplatforming as a tactic actually works in the fight against online hate and its dangerous real-world effects.

With these in mind, one can broadly separate the opponents of online deplatforming into three camps: those who oppose it merely because they and their ideological kin are the victims of it (and often ignore or actively celebrate when it befalls their enemies); those who see it as an infringement upon free speech and open debate; and those who simply believe it does not work as a tactic.

Many of the most outspoken opponents fall into the first camp, though little is to be gained from examining at length their self-pitying and hyperbolic accusations of politically motivated pogroms. White nationalist YouTuber Colin Robertson (AKA Millennial Woes) has fearfully bemoaned “the massive purge” of him and his like-minded activists from YouTube, while far-right conspiracy theorist Paul Joseph Watson accused Facebook of issuing a “fatwa” against him.

Then there are those who oppose deplatforming due to their belief that it curtails free speech. This group can be split further, into those on the far right who do so disingenuously and hypocritically, and the liberals with more genuine concerns about the eroding of the right to freedom of expression, even for those they fundamentally disagree with.

For the far right, deplatforming from social media is emblematic of the wider ‘war’ on freedom of speech and the supposed sacrificing of their rights at the altar of political correctness. Scratch the surface, though, and it becomes evident that for many free speech is not a right, but merely a tactic.

With their ideas long marginalised from the mainstream, they are using the notion of free speech to try and broaden the ‘Overton Window’ (the range of ideas the public is willing to consider and potentially accept) to the point where it includes their prejudiced and hateful politics.

Take, for example, the hypocrisy of Milo Yiannopoulos, who organised a ‘Free Speech Week’ at the University of California, Berkeley in 2017 despite having called for the banning of Glasgow University’s Muslim Students Association. He openly conceded at a talk in New Mexico in 2017:

“I try to think of myself as a free speech fundamentalist, I suppose the only real objection, and I haven’t really reconciled this myself, is when it comes to Islam. […] I struggle with how freely people should be allowed to preach that particular faith [Islam] in this country”.

For many, such as Yiannopoulos, free speech should be universal except for those they dislike. However, it would be wrong to argue that all those who oppose deplatforming on the basis of free speech do so disingenuously or out of self-interest. Many earnestly cite John Milton’s Areopagitica or George Orwell’s 1984, parrot misattributed Voltaire quotes, or offer selective readings of John Locke.

Nick Cohen of The Guardian is an archetypal example of this old liberal position, arguing in favour of the “principle that only demagogues who incite violence should be banned” and stating that “censors give every appearance of being dictatorial neurotics, who are so frightened of their opponents that they cannot find the strength to take them on in the open” and “see no reason to treasure free debate”. He rather glibly argues that:

“If you can’t beat a bigot in argument, you shouldn’t ban them but step aside and make way for people who can. It’s not as if they have impressive cases that stand up to scrutiny.”

Leaving aside the complexity of how one defines ‘incitement to violence’, the argument that the best way to defeat white supremacists or fascists is to simply expose their bankrupt arguments – often phrased as “sunlight is the best disinfectant” – relies on a series of false assumptions. Most untenable is the oft-repeated notion that debate inevitably leads to greater understanding: the “truth will out” in the words of Shakespeare. Another is that diversity of opinion always leads to the attainment of the truth, and yet another is that the correct argument will always win if debated.

It would be wonderful if these idealised descriptions of discussion always rang true, but this optimism ignores the possibility that ill-informed opinions will flood the debate and that ‘he who shouts the loudest’ will end up drowning out others.

In reality the ‘marketplace of ideas’ can often signal little about the quality or value of the speech being sold. These arguments look even less tenable when applied to the febrile online world with its trolling and ‘pile on’ cultures. One also has to explain how nearly a century of “sunlight” on far-right ideas has yet to “disinfect” them, and to ask how many more people have to die in terrorist attacks such as those in Poway, Christchurch and El Paso until someone finally manages to comprehensively debate white supremacy out of existence.

Though the philosophical arguments against deplatforming are often based on untenable assumptions, one still has to ask whether it actually works as a tactic in the fight against hate. The usual arguments that it does not are neatly summarised by Nathan Cofnas, a philosophy DPhil candidate at the University of Oxford, in an article for the reactionary libertarian website Quillette. In it he argues:

Banning people from social media doesn’t make them change their minds. In fact, it makes them less likely to change their minds. It makes them more alienated from mainstream society, and, as noted, it drives them to create alternative communities where the views that got them banned are only reinforced. Banning people for expressing controversial ideas also denies them the opportunity to be challenged.

He adds that:

Firstly, banning people or censoring content can draw attention to the very person or ideas you’re trying to suppress. […] Secondly, even when banning someone reduces his audience, it can, at the same time, strengthen the audience that remains. […] Thirdly, any kind of censorship can create an aura of conspiracy that makes forbidden ideas attractive.

Others also make the legitimate argument that pushing extremists to marginal platforms makes it harder for civil society groups and law enforcement to monitor them. While Twitter is open, channels and discussion groups on apps like Telegram or dark web forums are often much harder to find and keep an eye on.

The trade-off between different types of platforms is one of which those on the more extreme end of the far right are already well aware: what scholars Bennett Clifford and Helen Christy Powell have called the “online extremists’ dilemma”, which is the lack of platforms that allow extremists to both recruit “potential new supporters” and maintain “operational security”. Many critics of deplatforming summarise this as “forcing them underground”, the idea that kicking them off open platforms makes it much harder to find and actually combat them.

Understanding these criticisms is important, but so too is avoiding caricatures of the pro-deplatforming position. Few, if any, are simply arguing for the deplatforming of the far right from mainstream platforms and then ignoring them on smaller or more secret platforms. The difficulties that arise from extremists migrating to other platforms are well understood, yet the decision to continue to push for deplatforming is made on a cost/benefit analysis.

If we conceptualise mainstream platforms like Facebook and Twitter as recruitment platforms, where extremists can meet and engage possible new recruits, while smaller platforms like Gab and Telegram can facilitate inter-movement collaboration, discussion and even planning, we still conclude that the benefits of reducing their ability to propagate hate and recruit people outweigh the challenges of monitoring them on marginal and more secure platforms.

In addition, deplatforming starves extremists of victims to target online. This is an important advantage of the tactic, and the failure to consider it is a core indictment of liberal anti-deplatforming critics.

Clifford and Powell suggest a strategy of “marginalisation”, which seeks simultaneously to make it difficult for extremists to reach the public and to maintain the possibility for law enforcement to continue to detect and monitor them. The aim of such a strategy is to “force extremists into the online extremist’s dilemma between broad-based messaging and internal security” thereby keeping “extremist narratives on the periphery by denying them virality, reach and impact.”

The success of this tactic is shown clearly in a report by researchers from the Royal United Services Institute (RUSI) and Swansea University, ‘Following the Whack-a-Mole’, which explored the impact of deplatforming on the British far-right group, Britain First.

The group had a wildly disproportionate online presence, with 1.8 million followers and 2 million likes on Facebook in March 2018, making it “the second most-liked Facebook page within the politics and society category in the UK, after the royal family.” However, its removal from Twitter in December 2017 and from Facebook in March 2018 had an enormous effect on the organisation’s influence in the UK.

As the report states, Facebook’s decision “successfully disrupted the group’s online activity, leading them to have to start anew on Gab, a different and considerably smaller social media platform.” Never able to attract large numbers of activists onto the streets, the anti-Muslim group’s astute social media operation had been successful at attracting huge numbers online, which allowed it to spread masses of anti-Muslim content across the internet.

Facebook’s decision to finally act dramatically curtailed Britain First’s ability to spread hate and left it on small, marginalised platforms, with its following on Gab now just over 11,000 and similarly small – just over 8,000 – on Telegram. This has undoubtedly been a key factor in the decline of Britain First as a dangerous force in the UK.

Those on the far right who have been deplatformed have articulated how severely it has curtailed their influence. Milo Yiannopoulos recently moaned:

“I lost 4 million fans in the last round of bans. […] I spent years growing and developing and investing in my fan base and they just took it away in a flash.”

He goes on to state how he and others have simply failed to build a following on platforms such as Telegram and Gab that is large enough to support them. “I can’t make a career out of a handful of people like that. I can’t put food on the table this way”, he explained.

He described Gab as “relentlessly, exhaustingly hostile and jam packed full of teen racists who totally dictate the tone and discussion (I can’t post without being called a pedo [sic] kike infiltrator half a dozen times)” and Telegram merely as “a wasteland”. “None of them drive traffic. None of them have audiences who buy or commit to anything,” he continued. The failure to transfer their audiences from major to minor platforms is a perennial problem of the deplatformed.

Another interesting case study is that of Stephen Yaxley-Lennon (AKA Tommy Robinson), who suffered a raft of recent deplatformings that have greatly impacted his influence.

In March 2018 Yaxley-Lennon was permanently banned by Twitter, and then in February 2019 he was banned from Facebook, where he had more than one million followers, depriving him of his primary means of communicating with and organising his supporters. Another major blow came on 2 April 2019 when YouTube finally acted and placed some restrictions around his channel, which resulted in his views collapsing.

Hundreds of thousands fewer people now see his content every month, which is a huge step forward. It may have also played into the severely reduced numbers we have seen at pro-Lennon events this year. During the summer of 2018 London was witness to demonstrations of more than 10,000 pro-Lennon supporters, while this year numbers have struggled to reach beyond a few hundred. The decline is by no means monocausal, but his and his associates’ inability to spread the word about events and animate the masses beyond core supporters has clearly played a role.

Debates around deplatforming the far right from social media are, of course, complex. It would be wrong to reduce those who oppose it to being supporters of the far right, as many will have principled and well-reasoned arguments against the tactic. When it comes down to the philosophical debates regarding its effect on free speech, there will be no convincing absolutists and libertarians.

However, it is possible to value and uphold freedom of speech and expression while simultaneously calling for the removal of dangerous extremists from social media platforms. We must not confuse their right to say what they please (within the law) with their right to say it wherever they please: a right they do not have.

On the question of whether it works, the evidence that it does has now mounted to a point where it is harder and harder to oppose on empirical grounds. Arguments that “sunlight is the best disinfectant” and the idea that hate can be debated into submission increasingly sound at best idealistic, and at worst downright ignorant.

The last decade has seen far-right extremists attract audiences unthinkable for most of the postwar period, and the damage has been seen on our streets, in the polls, and in the rising death toll from far-right terrorists. Deplatforming is not straightforward, but it limits the reach of online hate, and social media companies have to do more and do more now.

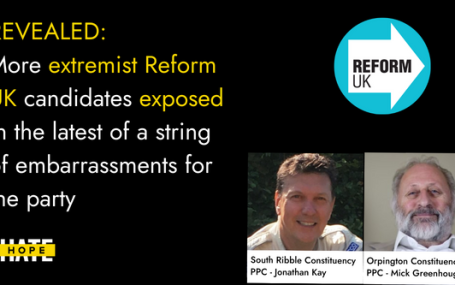

HOPE not hate reveals two more extremist candidates from Reform UK, in the latest of a string of embarrassments for the party UPDATE: Just hours…